Modeling Spatial Integration Changes in Autism

Sarah Vassall

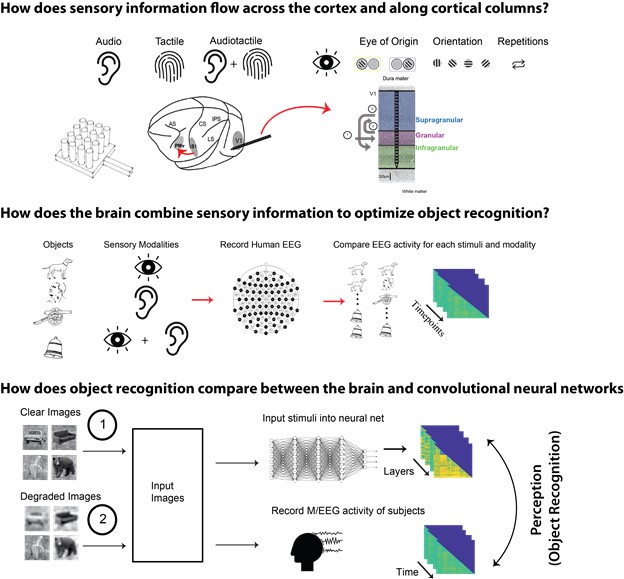

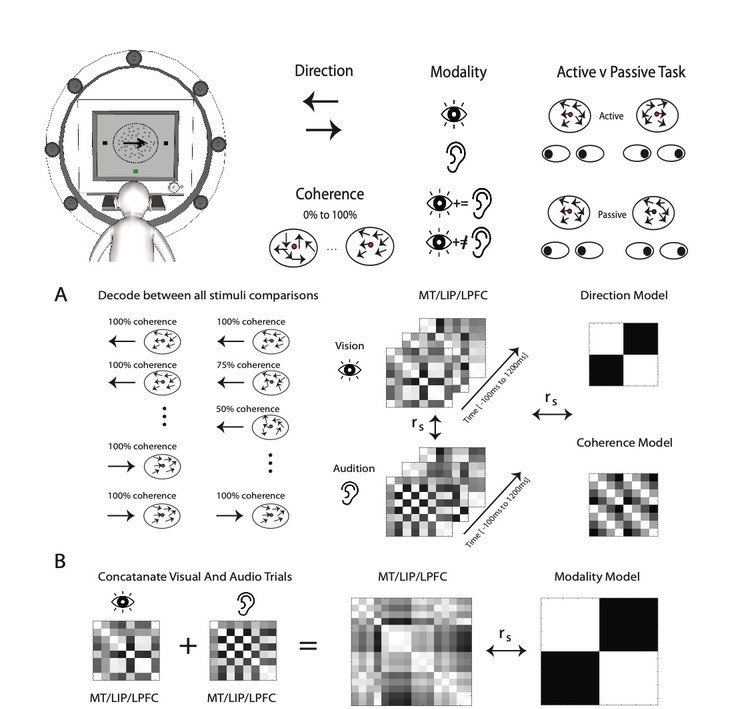

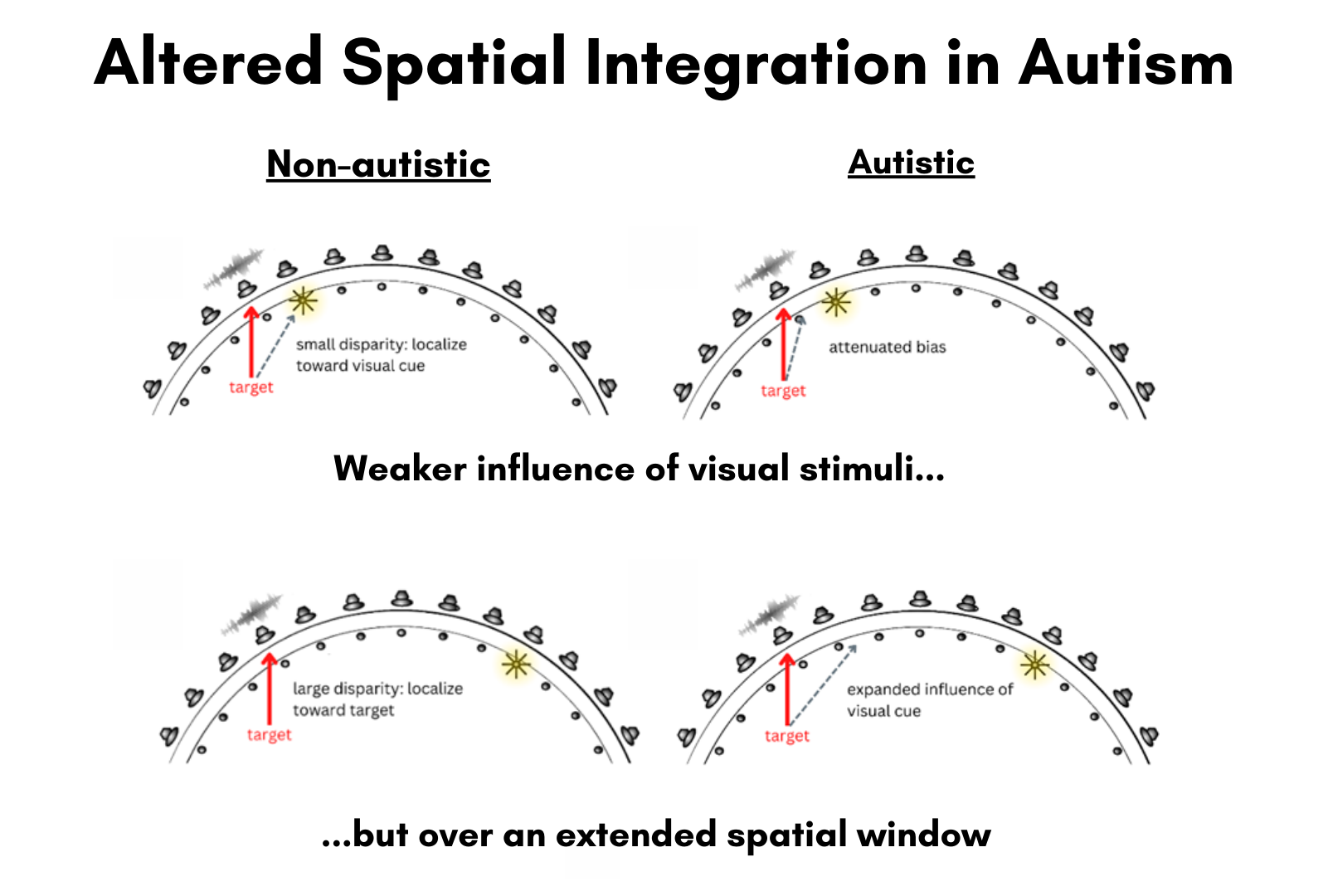

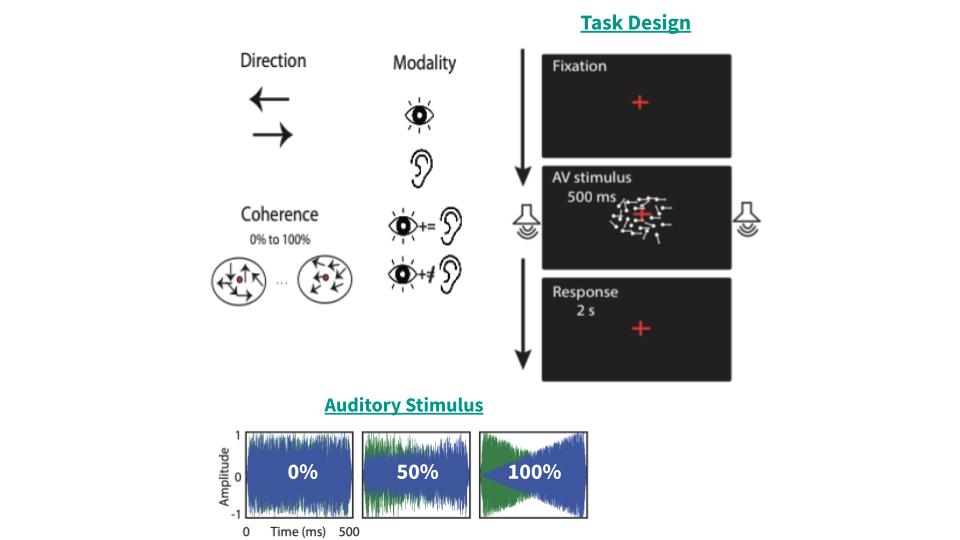

Our world is inherently multisensory, and the full picture of our environment emerges only when we can effectively combine information across the senses. Over a decade of research has shown that multisensory integration and sensory function are altered in autistic individuals, and that these alterations contribute to the presentation of both core and associated clinical features. Audiovisual spatial integration, however, has not yet been characterized in autism. This is the process of combining sights and sounds that may arise from different spatial locations (e.g., pairing a speaker's tone of voice with their facial expression).

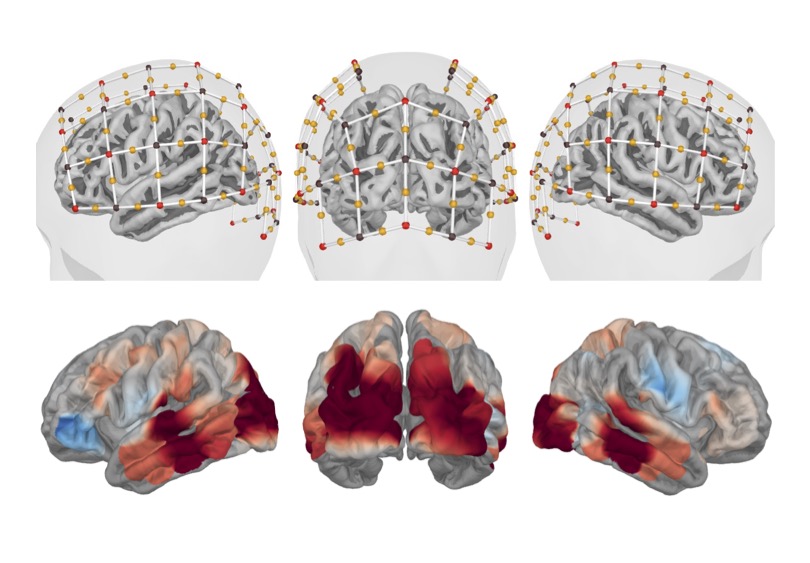

The goal of my research is to elucidate the relationship between audiovisual spatial integration abilities and scores on clinical assessment scales. This will help us better understand how autistic children represent audiovisual space, and how changes to these representations might contribute to important multisensory activities such as language development, speech comprehension, and social engagement. Further, I aim to understand how electrophysiological differences contribute to differences in spatial representation, and whether models of sensory-evoked neural activity in autism can be developed to identify key brain-behavior relationships in this population.